After more than 40 years of operation, DTVE is closing its doors and our website will no longer be updated daily. Thank you for all of your support.

Broadcasting in the age of AI

Artificial intelligence is already having a profound effect on the media and TV space, but what applications hold most promise and what challenges need to be overcome? Andy McDonald reports.

Artificial intelligence (AI) and machine learning (ML) will have a profound impact on businesses and working practices in the coming years, and the TV and broadcasting space is no exception.

The technology is already being used to analyse and understand video content automatically and efficiently. While this can help speed up processes such as searching for content, assembling video clips and distributing them to the right places, how far it will encroach on the creative process of making programmes and content remains to be seen.

AI can also help operators and broadcasters to gain a deeper understanding of their audiences, which can bring a range of benefits – from personalised recommendations and targeted offers to emotional response measurement.

We talked to some of the leading technology providers in this space to find out how AI and ML are being deployed, and what limitations must be overcome for the technology to continue to evolve.

Data crunching

IBM has a series of products and services that are designed to understand and learn what is contained inside a video. IBM Watson Media brings IBM’s artificial intelligence offering to the media and entertainment industry and is designed to support content owners across the content lifecycle.

“Artificial intelligence is a game changer for the broadcasting industry,” says David Kulczar, senior offering manager, IBM Watson Media. “In a few short years, we’ve seen innovation across use cases like content creation, closed captioning, advanced metadata, and cognitive highlight clipping. As AI and machine-learning models continue to be refined, we’ll see more companies turn to AI as a foundational technology for their daily operations.”

IBM Watson Media’s core offering is Video Enrichment, which automatically identifies complex content within a video to generate new, advanced metadata. This makes it easier for content owners to search their video libraries and decide which content to match with advertisers, and it reduces the time it takes to create highlight clips. The company recently partnered with Fox Sports to provide an AI-powered highlight experience for the 2018 World Cup, and earlier this year it created an interactive, AI-powered viewer experience for the 2018 Grammy Awards.

IBM also recently launched Watson Captioning, a service that automatically captions live programming, such as weather reports and breaking news, as well as on-demand content. The service can be trained on location-specific data sets so that local nuances such as landmarks, sports teams and politicians are captioned accurately.

“IBM Watson Media’s biggest priority is enabling our customers to stay competitive in an increasingly crowded and fragmented media landscape,” says Kulczar. “We provide our partners with the tools they need to increase workflow efficiencies and enhance the viewer experience, all of which impact the bottom line.”

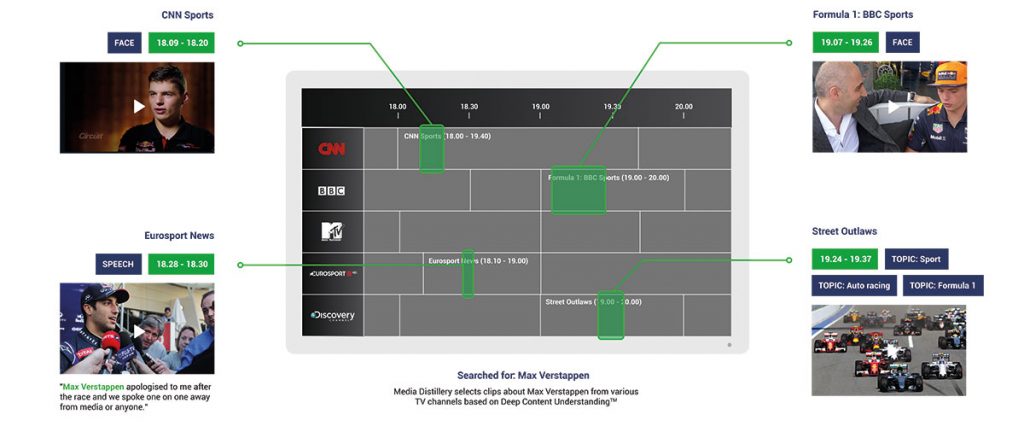

UK-based Media Distillery uses AI to analyse video for what it terms ‘deep content understanding’. Its technology is used by TV operators such as Telenet in Belgium and helps them to optimise the user experience in their replay environments. They can use Media Distillery to find out what is in their video content, who is on screen and when, what a programme is about, or when a programme started and ended.

“Our technology identifies a number of visual and audial aspects from video, such as speech, faces and objects. By combining these aspects, our technology even identifies the topic of the content,” says Media Distillery CEO, Roland Sars. “TV operators currently use our technology to solve the most pressing consumer frustrations in relation to watching television programs in a replay environment. These frustrations include missing out on the first couple of minutes of a programme because they were not recorded or having to sit through a few minutes of television advertisements before the programme finally starts because it started later than scheduled.”

Piksel’s vice-president of engineering Gerald Chao believes that there are unexplored search and discovery experiences that deep metadata can enable, “such as via facial recognition, emotional context, and plot analysis, to enhance how media would be discovered and consumed in the future”.

The company currently provides tech solutions to big-name companies like Liberty Global, Sky, OSN and Channel 4, and its AI and ML tools help editors to do their jobs more efficiently – for example by identifying duplicate records, erroneous data or metadata inconsistencies. Its AI can also extract deep metadata from assets that would traditionally require manual review and data entry.
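Piksel has not published how its tools work, so what follows is only an illustrative sketch of the kind of check described above. It is a simple rule-based pass, not machine learning: catalogue records are grouped by a normalised title and year, and groups whose fields disagree are flagged as inconsistent. The record fields (`title`, `year`, `runtime`), the sample data and the function names are all hypothetical.

```python
import re
from collections import defaultdict


def normalise_title(title):
    """Lower-case, drop punctuation and collapse whitespace so near-identical titles match."""
    cleaned = re.sub(r"[^\w\s]", "", title.lower())
    return " ".join(cleaned.split())


def find_duplicates(records):
    """Group records by (normalised title, year) and keep only groups with more than one record."""
    groups = defaultdict(list)
    for record in records:
        groups[(normalise_title(record["title"]), record["year"])].append(record)
    return {key: recs for key, recs in groups.items() if len(recs) > 1}


def find_inconsistencies(duplicates, field):
    """For each duplicate group, report the distinct values of `field` when they disagree."""
    report = {}
    for key, recs in duplicates.items():
        values = {record[field] for record in recs}
        if len(values) > 1:
            report[key] = sorted(values)
    return report


# Hypothetical sample catalogue: two entries for the same title with conflicting runtimes.
records = [
    {"id": "a1", "title": "The Crown", "year": 2016, "runtime": 58},
    {"id": "b7", "title": "the crown.", "year": 2016, "runtime": 61},
    {"id": "c3", "title": "Doctor Foster", "year": 2015, "runtime": 59},
]
dupes = find_duplicates(records)
print(find_inconsistencies(dupes, "runtime"))  # {('the crown', 2016): [58, 61]}
```

A production system would likely use fuzzier matching than exact normalised titles; the point here is only the two-step shape of detecting duplicates first and then checking them for conflicting metadata.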

Chao believes there is “plenty of headroom” for AI and ML to be used to improve operational efficiencies – like business intelligence, forecasting and cost modelling – and sees the opportunity for ML to optimise decision planning.

Content creation and distribution

Using AI to understand content is a powerful use of the technology, but machine learning also has the power to create and then distribute video to the end-viewer. “Currently, the video industry is experiencing its own sea change in the way video content is produced, distributed and consumed,” says TVU Networks CEO Paul Shen, who refers to the shift as ‘media 4.0’, analogous to AI-led ‘industry 4.0’.

TVU’s MediaMind suite of solutions delivers its media 4.0 vision and includes AI engines that recognise video content and automate its creation and distribution to specific platforms and devices, such as smartphones, TVs and social media. In this process, TVU indexes video using metadata generated by MediaMind’s AI engine, while TVU Producer creates content for different platforms or audience groups.

“Media 4.0 is about enabling the mass production of content for individual audiences through the automation of video production and distribution. This helps media companies looking to produce more content without increasing their expenditures,” says Shen. He claims that TVU MediaMind “creates a new story-centric workflow, as opposed to a programme-centric one” and “opens the floodgates for how programs are produced and distributed”.

Over in Tel Aviv, a company called Minute has developed a video optimisation technology that automatically generates highlights from full-length videos. The company’s ‘smart video previews’ product generates what company CEO Amit Golan describes as “the most effective five to seven-second teasers of the most interesting portion of a video”, which replace the static thumbnail that traditionally serves as a placeholder for a video.

“These highlights lead to increased click-through rates by an average of 300% and help to drive consumer engagement for publishers, which leads to additional advertising dollars,” claims Golan. He adds that during the World Cup this summer the technology was used by a partner to generate teasers and highlights in real time and resulted in “an increase of 13% of new users to the live stream”.
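Minute does not disclose how it decides which portion of a video is most interesting, so the sketch below simply assumes that a hypothetical per-second ‘interest’ score already exists (from whatever model produces it) and shows only the final selection step. It slides a five- to seven-second window over the scores and keeps the window with the highest average, using prefix sums so that each window is scored in constant time. The function name and scoring input are assumptions for illustration.

```python
from itertools import accumulate


def best_teaser(scores, min_len=5, max_len=7):
    """Return (start_second, length) of the window with the highest mean interest score.

    `scores` is a hypothetical list holding one interest score per second of video.
    Windows of min_len..max_len seconds are compared; ties keep the first (and
    shortest) window found, because only a strictly greater mean replaces the best.
    """
    if len(scores) < min_len:
        raise ValueError("video is shorter than the minimum teaser length")
    # prefix[i] is the sum of the first i scores, so any window sum is one subtraction.
    prefix = [0, *accumulate(scores)]
    best = None
    for length in range(min_len, min(max_len, len(scores)) + 1):
        for start in range(len(scores) - length + 1):
            mean = (prefix[start + length] - prefix[start]) / length
            if best is None or mean > best[0]:
                best = (mean, start, length)
    return best[1], best[2]


# A 36-second clip whose most interesting stretch runs from second 20 to second 25.
print(best_teaser([1] * 20 + [9] * 6 + [2] * 10))  # (20, 5)
```

Averaging rather than summing keeps longer windows from winning merely because they are longer; a real system would presumably also weigh factors such as scene boundaries, which this sketch ignores.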

Wibbitz is an AI-powered video creation platform that uses patented technology to analyse and summarise text-based information into video storyboards. The company’s tech selects relevant media, including videos, images, GIFs and soundtracks from its licensed media library to match the main idea of the text, adding text styles, transitions, and branded elements to each scene of the video.

“Once our technology takes care of the heavy lifting in the video creation process, our users are able to add their own creative touch and get their video out into the world in just a couple of minutes,” says Wibbitz CEO Zohar Dayan. Although AI often carries the stigma that it could one day replace humans altogether, Dayan believes that the technology will complement, rather than replace, content creators and storytellers.

Founded in 2011 with offices in NYC, Tel Aviv, and Paris, the company supports video creation for more than 400 publishers and brands, helping them to increase audience engagement and ad revenues across desktop, mobile, and social media. Its partners include Reuters, Forbes, Bloomberg, and The Weather Channel.

“By partnering with Wibbitz, The Weather Channel has been able to pair their hyper-localisation capabilities with our video creation technology, and redefine this experience on a digital screen through digital media network LocalNow,” says Dayan.

Elsewhere, Make.TV is integrating AI into its Live Video Cloud solution, which it will debut at IBC this year. The offering is designed to let broadcasters and publishers acquire, curate, route and distribute local live video sources from and to any destination. However, acquiring content is only the first step and Make.TV is also trying to help broadcasters and production teams identify the right feeds for their segments.

Make.TV CEO Andreas Jacobi says that in the near future he expects workflows to be able to integrate face and object recognition, enabling operators to automatically trigger graphics or switch between content – for example to follow a specific cyclist or car in a race, or automatically transfer segments to a different server.

In the longer term, once AI and machine learning algorithms are properly trained, he says: “We imagine whole live streams being automatically generated based on pre-configured rules – the operator becomes the teacher, the AI/ML algorithm becomes the new operator.” Like Dayan, he believes that AI will still need the guidance of a trained team to work efficiently.
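Make.TV has not detailed how such rules will be configured in Live Video Cloud. As a purely hypothetical sketch of the ‘pre-configured rules’ idea, assume an upstream face or object recogniser emits labelled detections for each live feed; a rule then maps a label and a minimum confidence to an action, such as switching to that feed or triggering a graphic. Every label, action name and threshold below is invented for illustration and is not Make.TV’s API.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Detection:
    feed: str          # which live source the recogniser was watching
    label: str         # hypothetical recognised entity, e.g. a car number
    confidence: float  # recogniser score between 0 and 1


@dataclass(frozen=True)
class Rule:
    label: str             # entity the operator wants to follow
    min_confidence: float  # ignore detections the recogniser is unsure about
    action: str            # hypothetical action name, e.g. "switch_to_feed"


def apply_rules(detections, rules):
    """Return (action, feed) pairs for every detection that satisfies a pre-configured rule."""
    actions = []
    for detection in detections:
        for rule in rules:
            if detection.label == rule.label and detection.confidence >= rule.min_confidence:
                actions.append((rule.action, detection.feed))
    return actions


# The operator 'teaches' the system to follow car 44 and show a graphic when it appears.
rules = [
    Rule("car_44", 0.8, "switch_to_feed"),
    Rule("car_44", 0.8, "show_driver_graphic"),
]
detections = [
    Detection("cam_3", "car_44", 0.92),  # confident sighting: both rules fire
    Detection("cam_7", "car_44", 0.55),  # below threshold: ignored
    Detection("cam_1", "car_16", 0.97),  # not a followed car: ignored
]
print(apply_rules(detections, rules))
# [('switch_to_feed', 'cam_3'), ('show_driver_graphic', 'cam_3')]
```

The confidence threshold is where the trained team Jacobi mentions would still matter: set it too low and the stream cuts to false sightings, too high and the system misses the car altogether.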

Know your audience

For the viewer, recommendations are a prominent example of AI in action. Underpinning this are broader efforts to understand viewer sentiment – something that a host of companies are helping operators and content providers with.

ThinkAnalytics has a background in personalised recommendations and uses AI and machine learning to power such services, as well as natural-language voice discovery. AI is at the heart of ThinkAnalytics’ big data analytics platform for the TV space, which lets its clients analyse data in real time to help them boost engagement or drive decisions about content buying, pricing, and promotions.

ThinkAnalytics’ customer base includes the BBC, Liberty Global, Proximus, Cox, Rogers, Sky, and Swisscom. Earlier this year it also partnered with Indian pay TV and OTT provider Tata Sky to power personalised content recommendations across connected devices – initially on Tata Sky’s mobile and PC applications.

“We predict AI will have a huge impact on many various parts of the broadcast chain, including: natural language processing for voice and speech; content understanding on audio, video and subtitle processing; advertising placement; discovery and recommendations; and personalisation,” says ThinkAnalytics founder and CTO, Peter Docherty.

“The key priorities are expanding the product portfolio with further enhancements to our viewer analytics and audience insight solutions. These are two areas that complement our strong content discovery heritage and help customers to become more agile and better drive their business forward.”

Payments expert Paywizard recently launched an artificial intelligence-driven subscriber intelligence platform. Paywizard Singula is designed to let pay TV operators and OTT providers take a more data-driven approach to customer engagement by using subscriber insights and AI to reduce churn, grow average revenue per user and acquire new customers.

“The notion is that you can harness data to predict how likely a subscriber is to churn or how great their propensity to purchase a package is, but what makes Paywizard Singula so unique and powerful is that it allows operators to accurately identify the best action to take next in real time – in other words, determine what activity or interaction will work to keep that customer happy, on-board and positive about their experience,” says Paywizard CEO, Bhavesh Vaghela. “This action could be to offer a timely promotion, an informative communication, or even the decision to do nothing, depending on the recommendations provided within the Paywizard Singula platform.”

The Paywizard Singula launch comes shortly after the company partnered with the EPCC data science unit at the University of Edinburgh to develop advanced predictive models of consumer behaviour and automated processes, a move that will help develop Paywizard’s AI-driven capabilities. The initial project will see a team of data scientists working alongside Paywizard’s internal business intelligence team to deliver the new, enhanced functionality, with Paywizard looking to expand its internal team with University of Edinburgh graduates.
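Paywizard has not published how Singula chooses an action. One common way to frame ‘next best action’, shown here only as an assumption-laden sketch, is to estimate the expected net value of each candidate action for a single subscriber (churn probability × retention uplift × revenue at risk, minus the action’s cost) and pick the highest, keeping ‘do nothing’ as a zero-value option. The uplift and cost figures, action names and function names are hypothetical inputs, not Paywizard parameters.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Action:
    name: str
    uplift: float  # assumed share of would-be churners this action retains
    cost: float    # assumed cost of taking the action for one subscriber


DO_NOTHING = Action("do_nothing", 0.0, 0.0)


def next_best_action(churn_probability, monthly_value, months_retained, actions):
    """Pick the action with the highest expected net value for one subscriber.

    Expected value = churn probability x uplift x revenue at risk - cost.
    'Do nothing' scores zero and is listed first, so it wins ties and is chosen
    whenever no action is expected to pay for itself.
    """
    revenue_at_risk = monthly_value * months_retained

    def expected_value(action):
        return churn_probability * action.uplift * revenue_at_risk - action.cost

    return max([DO_NOTHING, *actions], key=expected_value)


actions = [Action("discount_offer", 0.30, 5.0), Action("info_email", 0.05, 0.10)]
for churn in (0.6, 0.05, 0.01):
    print(churn, next_best_action(churn, 20.0, 6, actions).name)
# 0.6 discount_offer
# 0.05 info_email
# 0.01 do_nothing
```

The usage lines show the behaviour Vaghela describes: a high-risk subscriber earns a costly promotion, a moderate-risk one gets a cheap communication, and a loyal one is left alone.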

With a focus purely on sentiment, New York-based Canvs claims to be the industry standard for emotion measurement. The company uses patented semantic AI and machine learning systems to understand how people feel, why they feel that way, and the business impact that creates for brands, agencies, and media companies.

Canvs’ clients include Comcast, Fox, Turner and Netflix, and earlier this year NBCUniversal signed up to use the Canvs Surveys product to get a consistent and accurate read on open-ended survey responses. The NBC research department also uses Canvs for pilot testing. Canvs CEO Jared Feldman claims that it helped to cut the time it takes NBC to sort survey responses from 16 hours to one, while providing “never-before-seen normative insights across pilots”.

“Ninety percent of human decisions are based on emotion, and there is no trusted standard in quantifying emotional response at scale. Canvs is able to use its patented semantic AI to decode the unnatural language phenomenon,” says Feldman. “Put simply, Canvs uses AI to provide immediate emotional and topical analysis of words expressed online, via surveys, anywhere.”

Discussing the company’s main priorities, Feldman says that he wants to help ensure that Canvs’ broadcast partners use it daily to create research efficiencies, unlock marketing opportunities and increase revenue. “Our biggest challenge is where to focus because Canvs is applicable to so many markets beyond media,” he says. “If Canvs can continue to be the enabling agent that transforms emotion insights into business-critical media decisions, the sky is the limit for our growth.”

In April of this year, US social entertainment company Fullscreen acquired influencer marketing platform Reelio in a move designed to make the influencer marketing process “automated, scalable, and streamlined from start to finish”.

Reelio’s AI platform identifies which creators would work well with brands based on the audiences that they share and the relevance of content to the advertiser. It also predicts the return on investment a brand might see when working with influencers across a set of social media platforms and formats and suggests how to optimally allocate budgets.

“Consumers have had a very tenuous relationship with advertising over the last few decades, to put it mildly,” says Reelio CEO Pete Borum. “Our mission is to transition the world away from advertising that is interruptive and annoying, to unintrusive branded content that entertains, educates and inspires – all while driving real and measurable ROI to advertisers.”

Learnings and limitations

While the existing benefits and the further potential of AI and ML for the broadcast and media industries seem clear, there are still challenges that must be overcome.

Shen at TVU argues that the greatest of these is the perception that AI-led solutions will be much more powerful a year from now, meaning that AI is “pretty much only deployed today if it’s both the only solution for a task and that task absolutely needs to be accomplished.” However, he believes that the time when AI will be the first-choice solution is “quite some time in the future – but probably not as far away as most people think.”

Docherty at ThinkAnalytics says the biggest issue surrounding AI is not the technology itself but making sure that you have good data to work from. He says that it is easy to waste too much time getting data out of silos, and that this data must then be cleaned and transformed to avoid ‘garbage in, garbage out’. At the same time, hiring skilled people to do such work presents another challenge.

The scope and scale of datasets is also cited as a challenge by Borum at Reelio. “Pre-acquisition, Reelio relied on a combination of very large, publicly available datasets, as well as smaller, proprietary datasets,” he explains. “Combining those two and leveraging them to train AI in a way that is unique and valuable is quite challenging. Now that we’re part of Fullscreen, the scale of the data we have access to is significantly larger and significantly more varied. This solves many of our previous challenges and simultaneously creates new ones.”

Minute’s CEO Amit Golan says that AI needs a defined question to get the right answer. If the question is vague or subjective, the insight gained might not be appropriate. Data sets also need to be big enough to yield good results, with a large amount of data or content on the same topic required for 100% accuracy.

Kulczar at IBM says that video is a complex medium with nuances that AI technology needs to be trained to understand. For example, crowd noise at a golf tournament will be very different to that at a football match, but in its own context each can indicate excitement levels.

“One aspect of the technology that is often overlooked is training. AI is only as good as the dataset it is trained on and creating an intelligent system requires the right information,” he says. “While the past two years have been critical for AI innovation, and the technology has proven to be a gamechanger for media, we are still in the early days of artificial intelligence.”

While predictions for an AI-powered ‘fourth industrial revolution’ seem to fall anywhere from automated disruption to full robot apocalypse, for the media industry there are certainly promising signs that AI will help companies to assemble and sort content, and help viewers to consume it. How big a step change this will create for the TV sector in the long term remains to be seen, or to be determined by an algorithm.