YouTube removes eight million videos in Q4 2017

YouTube removed more than eight million videos from its platform in the fourth quarter of 2017, most of which were either spam or adult content.

The video site revealed the information in its first quarterly YouTube Community Guidelines Report, which it is producing as part of its efforts to clamp down on content that violates its policies.

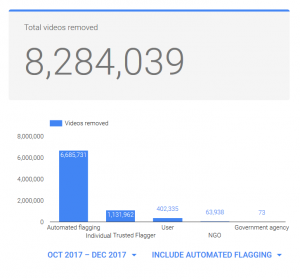

For the October-December period YouTube removed 8.28 million videos in total. Of these, 6.69 million were flagged for review automatically by its systems.

Some 1.13 million of the removed videos were flagged by a human member of YouTube’s Trusted Flagger programme, while 402,000 were flagged by site users.

YouTube said that 75.9% of the removed videos were taken down before they received any views, while more than half of the videos that it removed for violent extremism had fewer than 10 views.

“Machines are allowing us to flag content for review at scale, helping us remove millions of violative videos before they are ever viewed,” said YouTube in a blog post.

“Deploying machine learning actually means more people reviewing content, not fewer. Our systems rely on human review to assess whether content violates our policies.”

In December YouTube announced plans to grow its ‘trust and safety teams’ to 10,000 people in 2018 to clamp down on content that violates its policies.

In an open letter, YouTube CEO Susan Wojcicki said that in the last year YouTube has taken action to protect its community against violent or extremist content. Now it is applying the same lessons to tackle “other problematic content”.

In January the site introduced stricter criteria for which channels can run ads. Previously, channels on YouTube simply had to reach 10,000 total views to be eligible for the YouTube Partner Program. Under the new rules, channels need 1,000 subscribers and 4,000 hours of watch time within the past 12 months to be eligible.